Google sometimes does phrase matching even if the search query isn’t in quotes. They’ll also include words that are traditionally considered search operators and stop words if they’re part of a popular phrase. It seems Google is getting steadily better at understanding language.

Understanding how Google indexes phrases and uses phrase-based analysis of language, along with semantic analysis of these terms, can help you better understand on-site targeting and link building.

Disclaimer:

I don’t work at a search engine, so I can’t make any concrete claims about how search engines work. I just read a lot of papers and patents. You can learn more about phrase indexing here.

Understanding how search engines might discover and use phrases can help you move beyond “exact match” targeting in both content and link building.

There has been a lot of conversation lately about the change in the weight of exact match anchor text, but I don’t think it is as simple as Google turning down the knob on exact anchors. Panda has shown us that Google has significantly improved its ability to analyze content at scale. This may be resulting in a better understanding of language usage across the net.

The Problem With a Phrase List

One of the common problems with understanding phrases is building a list of known phrases in a lexicon. Not only is the number of phrases so large that human creation doesn’t scale, but language is extremely dynamic with phrases entering and leaving the lexicon all the time. This means manually building a phrase list is not a viable solution.

To do this at scale, search engines need to develop algorithmic processes for building and maintaining lists of known phrases and their relationships. I think the methodology for doing this has been in place for a long time, but Google is likely iterating on this, discovering methods to do it faster and with fewer resource demands.

Ways to Build a Phrase List

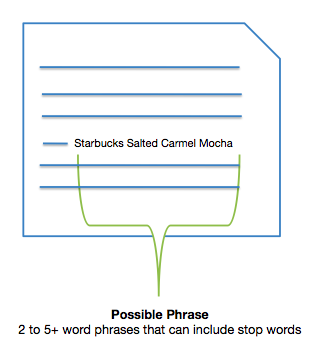

Search engines are able to start building an index of possible phrases as they crawl and discover sets of words that form phrases. As these phrases are discovered via a crawl, they are entered into a list of potential terms.

There is likely no need to build this list out of the entire document set of the internet. Doing so with some subset (a large subset) would allow a search engine to build a significant set of phrases.
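To make the discovery step concrete, here is a minimal Python sketch. It assumes, purely for illustration, that a “potential phrase” is any run of two to four consecutive words; the tokenizer, the n-gram range and the function names (`candidate_phrases`, `build_candidate_list`) are my own choices, not anything taken from Google’s patents.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase, then keep runs of letters, digits and apostrophes as tokens.
    return re.findall(r"[a-z0-9']+", text.lower())


def candidate_phrases(text: str, min_n: int = 2, max_n: int = 4) -> Counter:
    """Count every contiguous run of min_n..max_n words in one document."""
    tokens = tokenize(text)
    counts: Counter = Counter()
    for n in range(min_n, max_n + 1):
        for i in range(len(tokens) - n + 1):
            counts[" ".join(tokens[i:i + n])] += 1
    return counts


def build_candidate_list(documents: list[str], min_n: int = 2, max_n: int = 4) -> Counter:
    """Accumulate candidate phrases over a (large) sample of crawled pages."""
    total: Counter = Counter()
    for doc in documents:
        total.update(candidate_phrases(doc, min_n, max_n))
    return total


# Tiny stand-in for a crawl sample.
crawl_sample = [
    "Starbucks added the peppermint mocha for the Christmas season.",
    "Try a peppermint mocha this Christmas season.",
]
candidates = build_candidate_list(crawl_sample, max_n=2)
print(candidates["peppermint mocha"], candidates["christmas season"])  # 2 2
```

Most of the candidates this produces (“the peppermint”, “mocha for”) are noise; separating them from real phrases is what the occurrence analysis is for.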

Reviewing Occurrence in Crawled Document Set

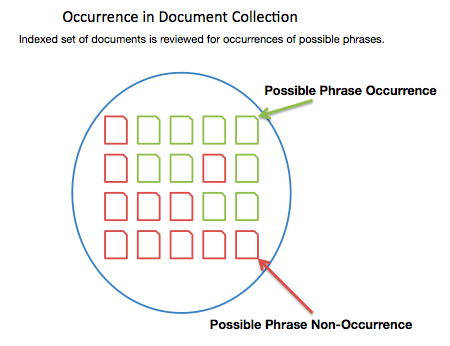

Determining if these potential phrases are real phrases is done by looking at their presence across a large document set from a crawl of the internet. Common phrases in the lexicon will appear over and over on many pages in a document set.
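One simple way to measure presence across a document set is document frequency: the number of pages a candidate appears on, counting each page only once so that a single page repeating a phrase can’t inflate it. This is a sketch under that assumption; `document_frequency` is a hypothetical helper name and the pages are made up.

```python
import re
from collections import Counter


def ngram_set(text: str, n: int) -> set[str]:
    # Distinct n-grams on one page; using a set means repeats on a page count once.
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return {" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def document_frequency(documents: list[str], n: int = 2) -> Counter:
    """Number of documents in which each n-gram appears at least once."""
    df: Counter = Counter()
    for doc in documents:
        df.update(ngram_set(doc, n))
    return df


docs = [
    "peppermint mocha peppermint mocha peppermint mocha",
    "a peppermint mocha for the christmas season",
    "the christmas season menu includes a peppermint mocha",
]
df = document_frequency(docs)
print(df["peppermint mocha"], df["christmas season"], df["mocha peppermint"])  # 3 2 1
```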

Finding Good Phrases

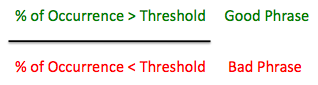

Legitimate phrases in a lexicon will appear as outliers amongst the set of possible phrases discovered in a crawl set. A threshold can be set so that any phrase exceeding a specific rate of occurrence is deemed a “good” phrase.
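The threshold test itself is short. The sketch below assumes document frequencies have already been counted over the crawl set; the counts and the 10% cut-off are invented for illustration, and a real system would presumably tune this value.

```python
def good_phrases(doc_freq: dict[str, int], num_docs: int, min_rate: float) -> set[str]:
    """Keep candidates whose share of documents exceeds min_rate."""
    return {phrase for phrase, df in doc_freq.items() if df / num_docs > min_rate}


# Hypothetical document frequencies from a 1,000-page crawl sample.
doc_freq = {
    "peppermint mocha": 420,
    "christmas season": 380,
    "the peppermint": 60,
    "mocha for": 35,
}
print(sorted(good_phrases(doc_freq, num_docs=1000, min_rate=0.1)))
# ['christmas season', 'peppermint mocha']
```

A raw rate on its own would also admit frequent function-word pairs such as “of the”, so a practical system would need extra filtering on top of this.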

This process for building a phrase set is scalable and allows the system to learn over time as phrases come and go from the lexicon. As the document set is recrawled over time, this phrase list can be rebuilt or appended with more temporal data.
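One way to picture the “rebuilt or appended” idea is a lexicon that keeps per-crawl document-frequency snapshots and scores only the most recent few crawls, so a phrase that falls out of use eventually drops below the threshold. The class name, window size and counts below are hypothetical.

```python
from collections import Counter, deque


class RollingPhraseLexicon:
    """Hypothetical lexicon scored over only the most recent `window` crawls."""

    def __init__(self, window: int, min_rate: float):
        # Each snapshot is (document-frequency counts, number of documents crawled).
        self.snapshots: deque = deque(maxlen=window)
        self.min_rate = min_rate

    def add_crawl(self, doc_freq: Counter, num_docs: int) -> None:
        # Appending to a full deque silently discards the oldest crawl.
        self.snapshots.append((doc_freq, num_docs))

    def good_phrases(self) -> set[str]:
        total_df: Counter = Counter()
        total_docs = 0
        for doc_freq, num_docs in self.snapshots:
            total_df.update(doc_freq)
            total_docs += num_docs
        return {p for p, df in total_df.items() if df / total_docs > self.min_rate}


lexicon = RollingPhraseLexicon(window=2, min_rate=0.2)
lexicon.add_crawl(Counter({"pumpkin spice latte": 40, "salted caramel mocha": 5}), 100)
lexicon.add_crawl(Counter({"pumpkin spice latte": 30, "salted caramel mocha": 25}), 100)
print(lexicon.good_phrases())  # {'pumpkin spice latte'}
lexicon.add_crawl(Counter({"pumpkin spice latte": 5, "salted caramel mocha": 45}), 100)
print(lexicon.good_phrases())  # {'salted caramel mocha'}
```

The window is the design choice here: a longer window gives a more stable lexicon, a shorter one reacts faster to phrases entering and leaving the language.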

Semantics: Related Phrases

I think determining phrase relationships at scale is where search is going to continue to get very interesting. Being able to better understand phrase relationships allows search engines to execute much more sophisticated language analysis (well beyond naive analysis like term frequency and keyword density).

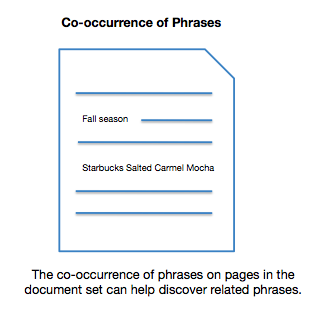

Co-occurrence of phrases in the document set can start to show relationships between phrases in a lexicon. The same way sites can be analyzed for co-occurrence and closeness on the link graph, so can phrases.

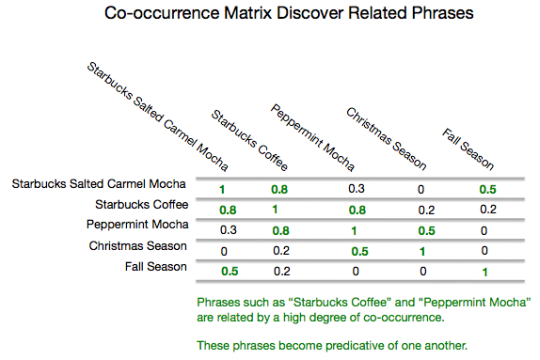

In the image above, the phrases “fall season” and “starbucks salted caramel mocha” appear on the same page, as Starbucks introduced this new drink along with other fall season drinks, such as the Pumpkin Spice Latte.

Looking at the repeated co-occurrence of these phrases across many documents can show the degree of relationship between two “good” phrases.
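A sketch of that pairwise measurement, assuming “co-occurrence” simply means both phrases appear somewhere on the same page (a real system might instead count proximity windows). It returns the raw count and the share of pages containing the first phrase that also contain the second; the function name and sample pages are hypothetical.

```python
import re


def normalize(text: str) -> str:
    # Pad with spaces so phrase matches can be anchored on word boundaries.
    return " " + " ".join(re.findall(r"[a-z0-9']+", text.lower())) + " "


def co_occurrence(documents: list[str], phrase_a: str, phrase_b: str) -> tuple[int, float]:
    """Return (pages containing both phrases, share of A-pages that also contain B)."""
    with_a = both = 0
    for doc in documents:
        page = normalize(doc)
        if f" {phrase_a} " in page:
            with_a += 1
            if f" {phrase_b} " in page:
                both += 1
    return both, (both / with_a if with_a else 0.0)


docs = [
    "Starbucks brings back the Pumpkin Spice Latte for the fall season.",
    "The salted caramel mocha joins the fall season lineup at Starbucks.",
    "Our fall season hiking guide.",
    "Starbucks salted caramel mocha review.",
]
both, ratio = co_occurrence(docs, "fall season", "salted caramel mocha")
print(both, round(ratio, 2))  # 1 0.33
```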

Co-occurrence Matrix

A co-occurrence matrix can be built out for many different possible related terms, which will demonstrate the relative relationship between sets of phrases.

A matrix, such as the one above, would describe the relative co-occurrence of a set of potentially related terms, and the degree of that relationship. This one would show a strong relationship between the “starbucks coffee” and “peppermint mocha” phrases. These first- and second-order relationships can be used for a wide range of semantic analysis.
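Extending the pairwise count to every pair of phrases gives the matrix. In this sketch, which assumes page-level co-occurrence and uses four made-up pages, cell `[i][j]` counts pages containing both phrase i and phrase j, the diagonal counts pages containing each phrase, and dividing a cell by its row’s diagonal gives a relative strength (the share of pages with phrase i that also contain phrase j).

```python
import re


def phrases_in(doc: str, phrases: list[str]) -> list[str]:
    # Word-boundary matching on a normalized, space-padded copy of the page.
    page = " " + " ".join(re.findall(r"[a-z0-9']+", doc.lower())) + " "
    return [p for p in phrases if f" {p} " in page]


def cooccurrence_matrix(documents: list[str], phrases: list[str]) -> list[list[int]]:
    index = {p: i for i, p in enumerate(phrases)}
    m = [[0] * len(phrases) for _ in phrases]
    for doc in documents:
        present = [index[p] for p in phrases_in(doc, phrases)]
        for i in present:
            for j in present:
                m[i][j] += 1  # the diagonal ends up counting pages with the phrase itself
    return m


def conditional(m: list[list[int]], i: int, j: int) -> float:
    """Share of pages containing phrase i that also contain phrase j."""
    return m[i][j] / m[i][i] if m[i][i] else 0.0


phrases = ["starbucks coffee", "peppermint mocha", "christmas season", "fall season"]
docs = [
    "Starbucks coffee fans line up for the peppermint mocha every Christmas season.",
    "Starbucks coffee menu: peppermint mocha, latte, cold brew.",
    "A homemade peppermint mocha recipe for the Christmas season.",
    "Starbucks coffee drinks for the fall season.",
]
m = cooccurrence_matrix(docs, phrases)
print(m)  # [[3, 2, 1, 1], [2, 3, 2, 0], [1, 2, 2, 0], [1, 0, 0, 1]]
print(conditional(m, 2, 1))  # 1.0: every "christmas season" page also has "peppermint mocha"
```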

And you can bet Google is throwing all kinds of linear algebra / vector analysis into this type of stuff.
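The post doesn’t say which linear algebra Google applies. As one illustrative and commonly used option, each phrase’s row of co-occurrence counts can be treated as a vector and compared with cosine similarity. That captures second-order relationships: two phrases that appear alongside the same context phrases score as similar even if they rarely share a page. The phrases and counts below are invented.

```python
import math

# Hypothetical co-occurrence counts against three context phrases:
# ["starbucks coffee", "christmas season", "fall season"]
profiles = {
    "peppermint mocha": [20, 18, 1],
    "gingerbread latte": [15, 14, 0],
    "pumpkin spice latte": [22, 1, 19],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity of two co-occurrence profiles (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


for other in ("gingerbread latte", "pumpkin spice latte"):
    print(other, round(cosine(profiles["peppermint mocha"], profiles[other]), 3))
```

Here “gingerbread latte” comes out much closer to “peppermint mocha” than “pumpkin spice latte” does, because both lean toward the Christmas context rather than the fall one.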

The Impact of Phrase Based Indexation on SEO

There are a number of items that change under a phrase-based index. The most fundamental is that search engines can start building keyword tables for documents at the phrase level, as opposed to a broad match keyword level. Once these phrases and their relationships are understood, this information can be used to improve relevancy scores, link analysis, and spam detection.

Think about domain level link analysis vs. page level link analysis. A bit like that, but with words. The whole system can get rebuilt using phrases instead of words as the base discrete unit.

Content Relevancy

One common shortcoming of SEO is that a page may not appear relevant if it doesn’t include the words in a search query. It is a common best practice that to target a term you need to include it at least once on the page, such as in this example of a perfectly optimized page.

This doesn’t have to hold true in a phrase based, semantically aware index and ranking system.

When optimizing a page, we work hard to include the keyword phrase we’re targeting in the title or page copy. However, this kind of content understanding by search engines is extremely simplistic. Search engines have improved by understanding relationships between words, such as sneaker and shoe, but phrase indexation takes this sophistication further.

Let’s look at an example of how this might work.

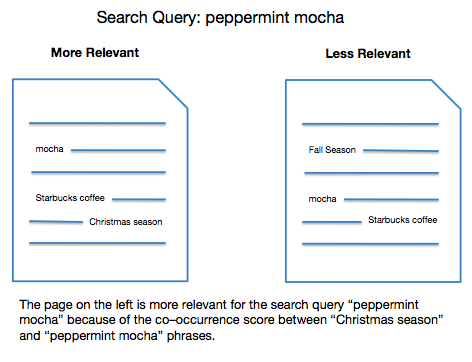

In the images above, search engines encounter a problem when gauging relevancy for the term “peppermint mocha” because neither page uses the phrase anywhere. In addition, both pages use the broad match term “mocha”, making them equally relevant on basic content analysis.

However, the co-occurrence matrix shown earlier reveals a strong relationship between the terms “christmas season” and “peppermint mocha” across pages in the document set. This relationship means that “christmas season” on the left page is more predictive of “peppermint mocha” than anything in the content of the page on the right. Therefore, the page on the left could receive a higher content relevancy score.

This allows search engines to step outside the shortcomings of their traditional analysis methods and gauge keyword relevancy even when the keyword itself doesn’t appear.
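A toy version of that scoring, assuming a hypothetical table of relatedness weights toward the query (for example, values read off a co-occurrence matrix) and a page score that simply sums the weights of the related phrases present. Both the weights and the additive formula are assumptions for illustration, not a known ranking function.

```python
import re

# Hypothetical relatedness of each phrase to the query "peppermint mocha".
RELATED = {
    "peppermint mocha": {"christmas season": 0.6, "starbucks coffee": 0.3, "mocha": 0.2},
}


def phrases_on_page(text: str, vocabulary) -> set[str]:
    # Word-boundary matching on a normalized, space-padded copy of the page.
    page = " " + " ".join(re.findall(r"[a-z0-9']+", text.lower())) + " "
    return {p for p in vocabulary if f" {p} " in page}


def relevancy(query: str, text: str, related: dict = RELATED) -> float:
    weights = dict(related.get(query, {}))
    weights[query] = 1.0  # an exact occurrence of the query still counts most
    return sum(weights[p] for p in phrases_on_page(text, weights))


left = "Our mocha is the perfect drink for the Christmas season."
right = "Our mocha is a rich chocolate and espresso drink."
print(round(relevancy("peppermint mocha", left), 2))   # 0.8
print(round(relevancy("peppermint mocha", right), 2))  # 0.2
```

Neither page contains “peppermint mocha”, yet the left page scores higher because it contains a phrase that predicts the query.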

Link Analysis Gets More Complex

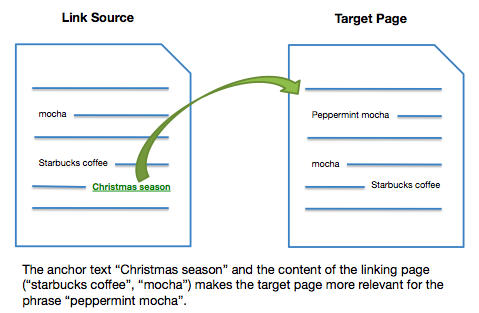

This relationship analysis can be carried over to a document’s anchor text tables. Anchor text that may previously have appeared irrelevant now creates an additional dimension on which relevancy can be scored.

The target page on the right is targeting the phrase “peppermint mocha” and has followed best practices by including the keyword in the body copy. It has also supported this term by using related phrases and variations of it. However, the page’s backlinks include anchor text such as “christmas season”, which, under simplistic anchor text analysis, would not increase this document’s relevance for the targeted phrase.

However, the phrase “christmas season” co-occurs strongly with “peppermint mocha”, so each is predictive of the other. Anchor text analysis such as this could explain why exact match anchor text appears to carry less weight: not because search engines simply turned down the factor because it’s bad, but because they’ve added new types of analysis to the mix.

Pages that receive less optimized anchor texts, due to a more natural non-optimized acquisition of links, are now likely better judged on their true relevancy.
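The same idea applied to anchor text, as a sketch: each backlink’s anchor contributes 1.0 if it exactly matches the target phrase, or a hypothetical relatedness weight otherwise. Passing an empty relatedness table reproduces the simplistic exact-match analysis for comparison. The weights and anchors are made up.

```python
# Hypothetical relatedness weights toward the target phrase.
RELATED = {"peppermint mocha": {"christmas season": 0.6, "starbucks coffee": 0.3}}


def anchor_score(target: str, anchors: list[str], related: dict = RELATED) -> float:
    """Sum the relevance each anchor text contributes toward `target`."""
    weights = related.get(target, {})
    score = 0.0
    for anchor in anchors:
        text = " ".join(anchor.lower().split())  # normalize case and whitespace
        score += 1.0 if text == target else weights.get(text, 0.0)
    return score


anchors = ["christmas season", "click here", "Peppermint Mocha", "starbucks coffee"]
print(anchor_score("peppermint mocha", anchors, related={}))  # exact match only: 1.0
print(round(anchor_score("peppermint mocha", anchors), 2))    # phrase-aware: 1.9
```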

Using Co-occurrence for Spam Detection

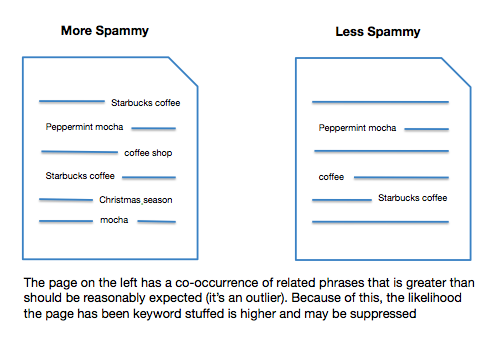

Understanding phrases is a good improvement because the phrase set and relationship data can be used to decrease the relevancy of potential spam documents, in addition to improving overall relevancy scores.

Using phrase semantic analysis across the document set, search engines can gauge a reasonable level of co-occurrence to expect for phrases. They can use this data to discover outliers, which may indicate on-site spam techniques.

Pages that use aggressive optimization techniques, such as stuffing content with related terms, may appear as outliers. Spam detection techniques such as keyword density and compression analysis can easily detect keyword repetition, but they fall short at detecting related-term stuffing.

The document on the left, for example, is not keyword stuffed directly by increasing term frequency / density, but is stuffed with an excess of related supporting terms. The degree of co-occurrence of these terms may be well beyond what is “natural” based on analysis of the document set, which makes it easier to determine that this page has been “optimized” by a human to target specific terms. The page on the right, by contrast, is still well targeted but is written more naturally. As a result, search engines can suppress the rankings of the document on the left.
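A sketch of the outlier test described above, under several assumptions: the related-phrase list, the “baseline” densities for naturally written pages, and the three-standard-deviation cut-off are all hypothetical. Density here means related-phrase occurrences per word, compared with the baseline via a z-score.

```python
import re
import statistics


def count_phrase(tokens: list[str], phrase: str) -> int:
    # Count occurrences by sliding a window of the phrase's length over the tokens.
    words = phrase.split()
    n = len(words)
    return sum(1 for i in range(len(tokens) - n + 1) if tokens[i:i + n] == words)


def related_density(text: str, related_phrases: list[str]) -> float:
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    hits = sum(count_phrase(tokens, p) for p in related_phrases)
    return hits / max(len(tokens), 1)


def is_outlier(density: float, baseline: list[float], z_cutoff: float = 3.0) -> bool:
    # z-score of this page's density against densities seen on typical pages.
    z = (density - statistics.mean(baseline)) / statistics.stdev(baseline)
    return z > z_cutoff


RELATED = ["christmas season", "starbucks coffee", "holiday drinks"]
BASELINE = [0.02, 0.04, 0.03, 0.05, 0.03, 0.04]  # hypothetical "natural" densities

stuffed = ("Peppermint mocha for the christmas season: a christmas season peppermint "
           "mocha with starbucks coffee, holiday drinks and christmas season holiday drinks.")
natural = ("We tried the new peppermint mocha at a local cafe during the christmas "
           "season and liked the balance of mint and chocolate.")
for name, page in (("stuffed", stuffed), ("natural", natural)):
    print(name, is_outlier(related_density(page, RELATED), BASELINE))
# stuffed True
# natural False
```

Note that the stuffed page never repeats the target phrase excessively, which is why a plain keyword density check on “peppermint mocha” would not catch it.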

Final Thoughts

Most of this post isn’t “new”, since the paper from Google came out several years ago, but I think Google’s sophistication has moved far past the idea of individual factors being turned up or down. There has been a lot of talk about the changes in exact anchor text, but I think we’re just seeing increased sophistication. We’ll likely see the recent content improvements and the machine learning improvements Panda (the engineer) made being applied to other hard-to-solve problems at Google.

Nice post, Justin. The expected-related-phrases technique most likely used by Google would be Latent Semantic Analysis. Microsoft holds a patent on scalable LSA. Google talks about this briefly in their patent on “Systems and Methods for Identifying Similar Documents”, although they do not outline exactly what technique they use for computing “bigram probabilities”.

I think there is a lot of potential in this area for SEOs to improve content by using topic-modeling to identify missed opportunities for relevant language in an article or post. More on that later 😉

@Russ

Yeah, I’ve read a bit on LSA. I remember a while back when SEOmoz thought it was well correlated.

It’ll be nice as well when search engines get better at understanding grammatical and language rules, so they know what is being said, rather than just the inclusion of a keyword.

There are a lot of really nice papers on similar / duplicate content analysis. I might try to write something on it.

Love your stuff!

I would agree that relationship analysis and keyword/phrase co-occurrence is gaining traction and becoming more sophisticated. However, I have never thought of using that for spam detection.

Content writers now have more to think about when writing in order to avoid keyword stuffing.

Also, I think as this becomes more sophisticated, it will help determine users’ intent when searching for keywords/phrases, and search engines will be able to deliver more relevant results, cutting down on spam and better matching users’ intent.

Hi,

Great article – I don’t often post comments but it was very easy to read, especially considering the complexity of the subject.

It also pushes me even more towards using content writers that don’t know SEO and are knowledgeable / experts in the given subject so that content is 100% natural. This is what Google is after and they can obviously tell when you are writing content only for SEO purposes.

Thanks,

Josh

There are quite a lot of really interesting papers on spam detection. I think some of them work better in theory than in reality. I’d imagine resources and scale are a big limiting factor.

I think over time, search engines will continue to reward more naturally written content.

Hi Justin,

Thank you for the post. A lot of information to digest there, but your explanation is crystal clear. I agree content writing and link building will become more sophisticated.

Love the analysis. I wasn’t thinking along those lines with Panda but your logic makes sense in terms of both anchor text phrase matching and potential spam detection. Good stuff!

Definitely interesting analysis. Whatever Google did, many of the results strike me as strange these days. Possibly due to semantics, as you suggested.

Great post – do you have a link for that paper you referenced in your final thoughts? It seems logical that engines would now use phrase co-occurrence for spam detection, but I haven’t seen anyone else make that connection yet.

As you teased, I would also love to hear your thoughts on duplicate content detection.

Love dis article. I do not think G is going to analyze all the phrase matches of incoming links in this decade.

@Justin:

It may be nice when search engines get a better understanding of grammatical and language rules, but keep in mind that every individual’s writing style differs a lot. Sometimes, a search engine may misjudge many things as errors.

I would say it will be nice when G focuses more on identifying the number of ‘frequent’ re-visits to a blog/website to determine the quality of a blog.

On uniqueness: I would definitely agree with your comment that G is taking measures to reward ‘rich’ content that is unique.

Hit the nail on the head with this one. Google is constantly changing to better understand what our content is about. I think it’s fantastic that Google is beginning to reward great rich content a lot more frequently. It’s great for us copywriters out there. 🙂