To set the stage: yesterday Google announced a new “Freshness” update that affects roughly 35% of searches. Barry wrote a great post about it on Search Engine Land, and Rand and Mike followed up with a Whiteboard Friday documenting some of the early observations from SEOs. Beyond the news of the update itself, I’d like to look at some of the methodologies search engines could potentially use to implement it.

This is quite a long post, so you might want to grab some coffee. The standard disclaimer applies: I don’t work for a search engine, and I can’t guarantee that the methodologies outlined in this post are the ones actually in use, but everything here is based on search engine patents and papers. I spent 6 hours reading so you don’t have to.

Inception Date

To start, there are two main buckets of content:

- Stale Content – Content not updated for a period of time

- Fresh Content – Content updated more recently or more frequently, including new content

To understand how this content is scored, search engines look at a document’s inception date. “Date” here is a loose concept that covers both date and time. There are two different types of inception date:

- The inception date of the document itself (when it was crawled, indexed, etc.)

- The inception date of the document first appearing in search results

In short, a document can be discovered and have one inception date, but never show up in a relevant query for a longer period of time (the second type of inception date).

The inception date of the document can be determined by either:

- The date the document was first crawled

- The date a link to the document was first discovered

The papers describe various ways to determine inception date, but there is no definitive way to know which one a search engine actually uses. It could use several, depending on the scenario the algorithm is processing.

Search engines can also go as far as setting a threshold before assigning a link-based inception date. For example, with a threshold of 3 links, no inception date is assigned the first two times a search engine sees a link to a URL; once the third link is seen, the inception date is defined. This could be used for sources such as social media, where a link in an update is ignored until it exceeds a threshold (i.e., a certain number of Tweets, or a Tweet from a user with a certain level of authority). Such a threshold quickly filters a large number of useless URLs out of processing.
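
The link-threshold rule can be sketched in a few lines of Python. This is a minimal illustration, not any search engine's code: `LinkInceptionTracker`, `observe_link`, and `document_age` are hypothetical names, and the threshold of 3 simply mirrors the example. The age calculation (query timestamp minus inception date) is included as `document_age`.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional


class LinkInceptionTracker:
    """Assigns a link-based inception date only after `threshold` links to a URL are seen.

    Hypothetical helper: the papers describe the idea, not this interface.
    """

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.link_counts: Dict[str, int] = {}  # links seen per URL so far
        self.inception: Dict[str, datetime] = {}  # URL -> assigned inception date

    def observe_link(self, url: str, seen_at: datetime) -> Optional[datetime]:
        # Once assigned, the inception date never moves.
        if url in self.inception:
            return self.inception[url]
        count = self.link_counts.get(url, 0) + 1
        self.link_counts[url] = count
        if count >= self.threshold:
            # The link that crosses the threshold defines the inception date.
            self.inception[url] = seen_at
            return seen_at
        return None  # still below the threshold: no inception date yet


def document_age(inception: datetime, query_time: datetime) -> timedelta:
    """Age of a document: the query timestamp minus its inception date."""
    return query_time - inception
```

A crawler would call `observe_link` every time it sees the URL in a link or a Tweet; until the third sighting, the URL simply has no link-based inception date.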

Google was quoted in the Search Engine Land article:

“one of the freshness factors — the way they determine if content is fresh or not — is the time when they first crawled a page”

Once an inception date is defined, a delta can be calculated between the timestamp of the query and the inception date. This delta is the age of the document. It can also serve as the time period for links-over-time calculations. For example, a document that is 1 day old with 10 backlinks may be judged differently than a document that is 10 years old with 100 backlinks.

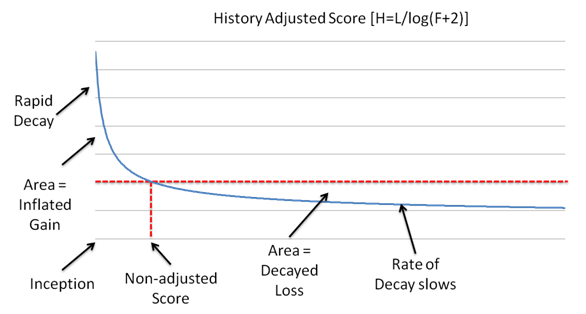

With this information, search engines can use a document’s history to weight its score. The paper Document Scoring Based on Document Inception Date gives the following equation:

H = L/log(F+2)

H = History Adjusted Score (the “freshness” adjusted score of a URL)

L = Link Score (the “relevancy” score based on traditional link analysis)

F = Elapsed Time (the delta calculated from the inception date)

Mathematically inclined readers can dig into it further, but the result is a fairly straightforward decay in link value, so we can skip straight to plotting it.

The result of this function is that a URL receives a significantly higher weight close to its inception date. This inflated score decays rapidly early on, but the rate of decay soon slows. Eventually the URL reaches a point where it receives neither promotion nor demotion, and as time goes on it continues to decay slowly, demoting stale content.
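
To make the decay concrete, here is the equation in Python. The log base is not stated in the quoted formula, so base 10 is an assumption; with it, H equals L at F = 8 (the neutral point where a URL is neither promoted nor demoted) and falls to L/2 at F = 98. Elapsed time is assumed to be measured in days.

```python
import math


def history_adjusted_score(link_score: float, elapsed: float, log_base: float = 10.0) -> float:
    """H = L / log(F + 2).

    `log_base` is an assumption: the formula as quoted does not state a base.
    `elapsed` (F) is assumed to be in days.
    """
    if elapsed < 0:
        raise ValueError("elapsed time cannot be negative")
    # The +2 keeps the denominator positive even at F = 0.
    return link_score / math.log(elapsed + 2, log_base)


for days in (0, 1, 8, 98, 998):
    print(days, round(history_adjusted_score(100.0, days), 1))
```

The steep drop between day 0 and day 8, followed by the slow tail, is the curve described above.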

Before getting too concerned, note that this type of function may only be applied to queries, and their results, that are deemed recency-sensitive. Queries that aren’t recency-sensitive may continue to behave as before.

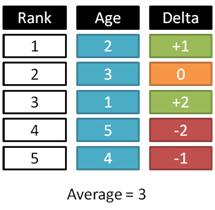

Average Age of Document Set

Another method of re-sorting based on inception date is to compare the age of every document in a returned set with the average age of all the documents in that set. The set can then be re-sorted based on the delta between each document’s age and the average.

This analysis might be applied to the top 10, 30, or 50 results returned for a query to re-sort them by freshness.
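
A minimal sketch of re-sorting by relative age, assuming each result carries a relevance score and an age in days. Sorting on the delta alone would give the same order as sorting on age (subtracting the same average from every age doesn't change the order), so the sketch blends the delta into the existing relevance score. The additive blend, the `weight` parameter, and the function name are illustrative assumptions.

```python
from statistics import mean


def resort_by_relative_age(results, top_n=10, weight=0.5):
    """Re-sort the top `top_n` results by comparing each age with the set's average age.

    results: list of (url, relevance, age_days) tuples, already ranked.
    `weight` and the additive blend are assumptions for illustration.
    """
    head, tail = results[:top_n], results[top_n:]
    if not head:
        return list(results)
    avg_age = mean(age for _, _, age in head)

    def adjusted(result):
        _, relevance, age = result
        # Positive when younger than the average, negative when older.
        delta = (avg_age - age) / avg_age if avg_age else 0.0
        return relevance + weight * delta

    # Results beyond top_n keep their original order.
    return sorted(head, key=adjusted, reverse=True) + tail
```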

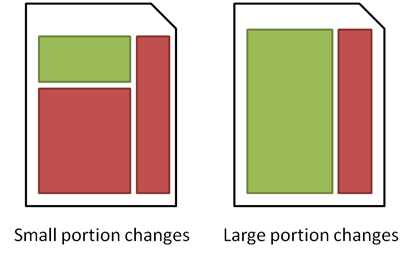

Proportional Document Change

Up to this point, we’ve assumed a document either changes or it doesn’t, but this analysis can be extended by looking at portions of a page that change while other portions remain static. An easy example is a homepage that pulls in blog content, which changes frequently, while the rest of the page stays the same from day to day.

Documents are given an update score using a weighted sum function.

U = f(UF,UA)

U = Update score

UF = Update frequency score (how often the page is seen to change)

UA = Update amount score (how much of the visible content, as a percentage, has changed)

Throwing out some test numbers shows how this might work.

Format: U = UF + UA

- 12 = 10 + 2

- 12 = 3 + 9

- 18 = 9 + 9

- 6 = 3 + 3

In short, a small content area has to update more often to earn the same score as a larger content area that updates less often.

In addition, the UA score can apply different weights to different elements. Items such as JavaScript, boilerplate, and navigation may be given little to no weight, while important items, such as titles, body content, and anchor text, may be given more weight. Search engines can also track UF and UA over time to measure their acceleration, i.e., whether the amount or the rate of change is speeding up or slowing down.
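
Here is the update score as a sketch, assuming equal weights for UF and UA (which reproduces the U = UF + UA test numbers) and made-up element weights for UA. `ELEMENT_WEIGHTS`, the 0 to 10 scale, and the function names are hypothetical; the papers don't publish values.

```python
# Hypothetical element weights: boilerplate matters little, core content matters more.
ELEMENT_WEIGHTS = {
    "title": 3.0,
    "body": 2.0,
    "anchor_text": 2.0,
    "navigation": 0.1,
    "javascript": 0.0,
    "boilerplate": 0.0,
}


def update_amount_score(changed_fraction, scale=10.0):
    """UA: weighted share of the page's content that changed, scaled to 0..scale.

    changed_fraction maps every element on the page to the fraction (0..1) of it
    that changed since the last crawl; unchanged elements should be listed as 0.
    Unknown element types get a default weight of 1.0.
    """
    total_weight = sum(ELEMENT_WEIGHTS.get(name, 1.0) for name in changed_fraction)
    if total_weight == 0:
        return 0.0
    weighted = sum(ELEMENT_WEIGHTS.get(name, 1.0) * frac for name, frac in changed_fraction.items())
    return scale * weighted / total_weight


def update_score(uf, ua, w_f=1.0, w_a=1.0):
    """U = w_f * UF + w_a * UA; equal weights reproduce the U = UF + UA examples."""
    return w_f * uf + w_a * ua
```

With these weights, rewriting the body of a page moves UA far more than swapping its navigation menu does.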

Query Based Scores

If query volume for a phrase, or a group of related phrases, increases over a period of time, it may signal a hot or popular query. In this case, documents related to those queries might also be ranked higher for other queries. It’s also a signal to weight temporal freshness factors more heavily.

Search engines may also look at the CTR of a result over time. If a result’s CTR accelerates over a period of time, its score might be boosted and it may move up in the search results. Search engines can also look at how often a stale document is selected over time, which might favor a stale document over a fresh one, even for a query that weights freshness heavily.
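
One way to express an "accelerating" CTR is the average second difference of a CTR time series. This is an assumption on my part, since the papers don't give a formula; `ctr_acceleration` is a hypothetical helper that takes one CTR value per period, oldest first.

```python
def ctr_acceleration(ctr_series):
    """Mean second difference of a CTR time series (e.g. one value per day).

    Positive: CTR growth is speeding up; negative: it is slowing down or falling faster.
    A hypothetical formulation; the papers don't specify one.
    """
    if len(ctr_series) < 3:
        return 0.0  # not enough points to measure a change in rate
    velocity = [b - a for a, b in zip(ctr_series, ctr_series[1:])]
    acceleration = [b - a for a, b in zip(velocity, velocity[1:])]
    return sum(acceleration) / len(acceleration)
```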

Link-Based Score

Search engines may also look at the number of inbound links appearing and disappearing, as well as changes in those rates, to determine whether a piece of content is going stale or becoming “fresh,” and adjust the relative weight of the document accordingly. In short, a stale piece of content that is good enough to keep gaining new inbound links can compete with a fresh document.
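
A sketch of comparing link gain and loss over time, assuming weekly counts of new and lost inbound links. The two-window comparison and `link_momentum` are illustrative, not taken from the papers.

```python
def link_momentum(new_links, lost_links, window=3):
    """Change in net link gain: recent window average minus the preceding window's.

    new_links / lost_links: counts per period (e.g. per week), oldest first.
    Positive suggests the document is "freshening"; negative suggests it is going stale.
    """
    net = [gained - lost for gained, lost in zip(new_links, lost_links)]
    if len(net) < 2 * window:
        raise ValueError("need at least two full windows of data")
    recent = sum(net[-window:]) / window
    earlier = sum(net[-2 * window:-window]) / window
    return recent - earlier
```

An old document with a steady positive net gain and non-negative momentum is exactly the case where stale content can still compete.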

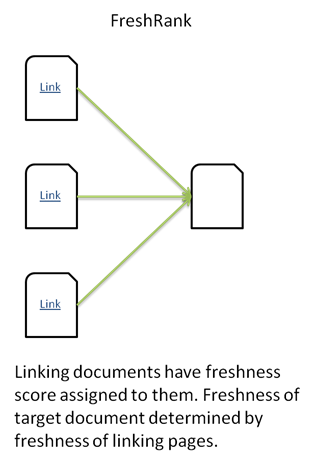

The papers also describe weighting links by their own freshness. That means a link may count for more or less based on the time since its inception date. Links may also be weighted by the freshness scores (UF/UA) of the page carrying them.
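
A sketch of per-link weighting, under two assumptions: newer links count more, decaying with a half-life (the papers only say a link may count for more or less with age, so an engine could just as well favor older links), and links from fresher pages count more. `half_life_days`, the 0.5 floor, and the function name are hypothetical.

```python
def weighted_link_score(links, half_life_days=180.0):
    """Sum of link weights, discounted by link age and boosted by source-page freshness.

    links: iterable of (link_age_days, source_freshness), source_freshness in [0, 1]
    (e.g. a normalized UF/UA score for the linking page).
    The half-life decay and the 0.5 floor are assumptions, not values from the papers.
    """
    total = 0.0
    for age_days, source_freshness in links:
        age_weight = 0.5 ** (age_days / half_life_days)  # halves every half_life_days
        source_weight = 0.5 + 0.5 * source_freshness  # links from stale pages still count half
        total += age_weight * source_weight
    return total
```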

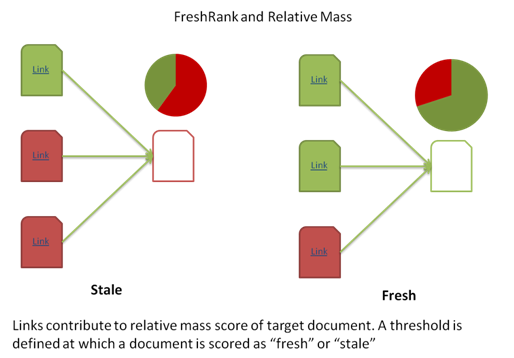

FreshRank

FreshRank is a term I’m coining to describe the behavior of search engines to pass a “freshness” score through links as outlined in the paper Systems and Methods for Determining Document Freshness.

FreshRank scores are used in combination with mass estimation. I’ve talked about mass estimation before in my link spam analysis post; in short, it calculates a threshold beyond which a certain level of contribution determines whether a page is fresh or stale.
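
Since FreshRank is my own label, the following is only an illustration of the idea: freshness propagated through links in a PageRank-style iteration, then cut at a fixed threshold standing in for mass estimation. The damping factor, iteration count, threshold, and all names are assumptions, not values from Systems and Methods for Determining Document Freshness.

```python
def fresh_rank(out_links, seed, damping=0.85, iterations=20):
    """PageRank-style propagation of freshness through links (hypothetical).

    out_links: dict mapping a page to the pages it links to.
    seed: each page's own freshness (e.g. from its update score), in [0, 1].
    """
    pages = set(seed) | set(out_links) | {t for targets in out_links.values() for t in targets}
    score = {p: seed.get(p, 0.0) for p in pages}
    for _ in range(iterations):
        incoming = {p: 0.0 for p in pages}
        for source, targets in out_links.items():
            if targets:
                share = score[source] / len(targets)  # split freshness across out-links
                for target in targets:
                    incoming[target] += share
        # Each page keeps part of its own freshness and inherits the rest via links.
        score = {p: (1 - damping) * seed.get(p, 0.0) + damping * incoming[p] for p in pages}
    return score


def classify_freshness(scores, threshold=0.1):
    """Fixed cut-off standing in for mass estimation; the threshold is an assumption."""
    return {p: "fresh" if s >= threshold else "stale" for p, s in scores.items()}
```

In this toy model, a page linked from a frequently updated homepage inherits some of its freshness, while a page linked only from a static archive does not.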

Suppressing Stale Content

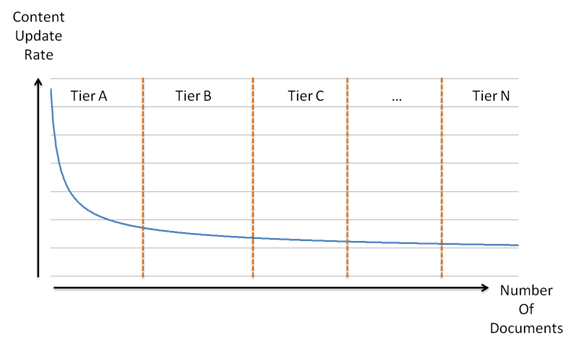

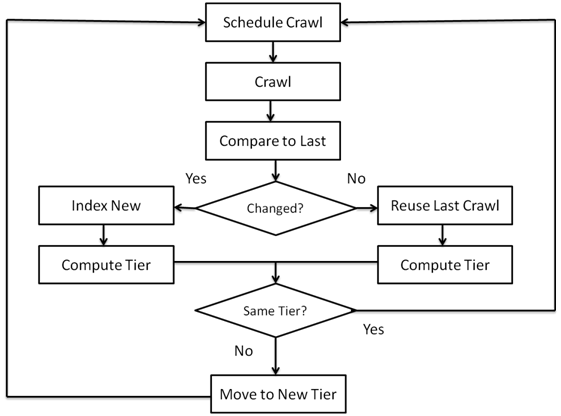

Another method for promoting fresh content, and creating an infrastructure that allows for freshness, is by creating systems that suppress stale content, while also moving resources away from managing it.

Search engine crawlers are designed to minimize the probability that search engines use stale content. This is an iterative process: the crawler starts by crawling a document at different intervals and learns the best crawl rate, which becomes the document’s web crawl interval.

The process repeats, continually adjusting the document’s crawl interval.

There are both critical and non-critical content changes. Similar to the computation of a document’s UA score, mentioned earlier in this post, search engines will apply a different weight to different elements of a document. This iterative process will ignore non-critical changes to a document.
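
An adaptive crawl interval could be sketched with a simple multiplicative schedule, which is an assumption: the interval shrinks after a critical change and grows otherwise, within bounds. The factors, bounds, and function name are hypothetical.

```python
def next_crawl_interval(current_hours, changed, min_hours=1.0, max_hours=24.0 * 30,
                        shrink=0.5, grow=1.5):
    """Adapt a document's crawl interval after each visit (hypothetical schedule).

    `changed` should be True only for critical changes; non-critical edits
    leave it False, so they don't speed up crawling.
    """
    factor = shrink if changed else grow
    return max(min_hours, min(max_hours, current_hours * factor))


interval = 24.0
for changed in (False, False, True, False):
    interval = next_crawl_interval(interval, changed)
```

Starting at 24 hours, that sequence of visits moves the interval 24 → 36 → 54 → 27 → 40.5 hours; repeated over many visits, it settles around how often the document actually changes.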

This type of process, combined with infrastructure updates like Caffeine, allows search engines to prioritize crawling and computation, speeding up indexing and the calculation of ranking scores. A cheap check, such as a checksum or a diff, can quickly determine whether a document has changed; if it has, it can be analyzed further. This allows search engines to build a multi-tier database for indexing documents.

Other signals, such as PageRank and SERP CTR, can be used alongside tier scores to determine crawl frequency, and in combination with the other types of analysis described earlier in the post.
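
The cheap change check can be sketched with a hash over only the critical elements, so edits to navigation or other non-critical parts don't register as changes. Which elements count as critical (`CRITICAL_ELEMENTS`) is an assumption, and SHA-256 via Python's `hashlib` stands in for whatever checksum an engine actually uses.

```python
import hashlib

# Hypothetical choice of "critical" elements; everything else is ignored.
CRITICAL_ELEMENTS = ("title", "body", "anchor_text")


def critical_fingerprint(page):
    """SHA-256 over only the critical elements of a page (dict of element -> text)."""
    digest = hashlib.sha256()
    for name in CRITICAL_ELEMENTS:
        digest.update(name.encode("utf-8"))
        digest.update(b"\x00")  # separator so adjacent fields don't run together
        digest.update(page.get(name, "").encode("utf-8"))
        digest.update(b"\x00")
    return digest.hexdigest()


def has_critical_change(old_page, new_page):
    """Cheap first-pass check; only pages that fail it go on to deeper analysis."""
    return critical_fingerprint(old_page) != critical_fingerprint(new_page)
```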

The powerful thing about this process is that it opens up resources to allow faster indexation of fresh documents.

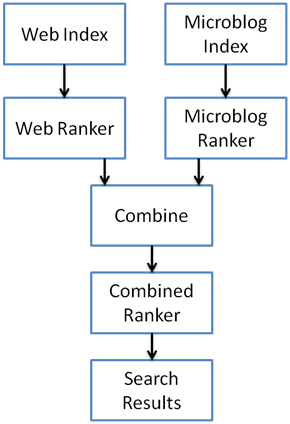

Using Microblog Data (Social)

Another interesting paper, which I won’t go into in depth, is Yahoo!’s Ranking of Search Results Based on Microblog Data. It’s worth considering, since microblogging is certainly a factor in determining the relevancy of fresh content.

Google was quoted in the Search Engine Land article:

“Often times when there’s breaking news, microblogs are the first to publish. We’re able to show results for recent events or hot topics within minutes of the page being indexed, but we’re always looking for ways we can serve you relevant information faster and will work to continue improving”

Since Google is certainly using microblog data, but not the Twitter Firehose, the Yahoo! paper offers some interesting ideas on how this might be done. Bill Slawski wrote about this paper within the last month in his post Do Search Engines Use Social Media to Discover New Topics. It’s worth reading.

One of the interesting takeaways from this paper is that it discusses using separate crawlers/indexers for web content and microblogging content. The content is treated separately and stored and ranked in a separate index.

One index is maintained for web URLs and another for microblog URLs. These URLs are processed and ranked independently, then combined into one index before being served as search results. The paper lists a number of sites used to collect microblog URLs, such as Twitter, Myspace, LinkedIn, Tumblr, etc. It goes on to note that this is only used for recency-sensitive queries.
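
A sketch of combining the two independently ranked indexes, assuming each returns (url, score) pairs whose scores are only comparable within that index. Normalizing by each list's top score, the `microblog_weight` knob, and `blend_results` itself are hypothetical; the paper describes the separation, not this merge rule.

```python
def blend_results(web, microblog, recency_sensitive, microblog_weight=1.0, limit=10):
    """Merge independently ranked web and microblog results (hypothetical blend).

    web, microblog: lists of (url, score) from their own indexes; scores are
    only comparable within an index, so each list is normalized by its top score.
    """
    def normalized(results, weight):
        top = max((score for _, score in results), default=0.0)
        if top <= 0:
            return []
        return [(url, weight * score / top) for url, score in results]

    merged = normalized(web, 1.0)
    if recency_sensitive:  # microblog results only join recency-sensitive queries
        merged += normalized(microblog, microblog_weight)
    merged.sort(key=lambda item: item[1], reverse=True)  # stable: ties keep web first

    ranked, seen = [], set()
    for url, _ in merged:
        if url not in seen:  # the same URL may appear in both indexes
            seen.add(url)
            ranked.append(url)
        if len(ranked) == limit:
            break
    return ranked
```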

It’s hard to say how valuable the information in this paper is, but academically it’s worth the read to see how search engines may approach ranking content based off microblogging data.

Triggering The Recency Algorithm and Machine Learning

All of these potential methods focus on queries that have been determined to be recency-sensitive. For those queries, a number of recency-sensitive factors are used in combination with traditional quality and relevancy factors to determine rankings.

One interesting theme throughout many of these papers is machine learning, such as in the paper Incorporating Recency in Network Search Using Machine Learning.

The problem to be addressed is correctly determining when a phrase, or set of phrases, is recency-sensitive. This is complicated, because a query may move in and out of that state. Search engines can look for signals such as query volume rising above its mean, and bursts in content mentions, inbound links, and query volume. From these factors, a “buzziness” score can be assigned to particular queries.

Machine learning allows human feedback to be fed into the algorithm. Human judges rate the “buzziness” of incoming queries, and that data is cycled back into the learning process. One example given: when a celebrity dies, interest in their works, such as films, increases, but those queries should not promote content with the same degree of “buzziness” as more specific queries, such as the celebrity’s name. Human ratings of these different queries are fed into the system so it can adjust and learn.
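
A minimal buzziness sketch, assuming each signal (query volume, content mentions, inbound links) is a history of per-period counts plus the current count. Bursts are measured as z-scores above the historical mean and summed with per-signal weights; both choices, and the function names, are assumptions rather than the paper's formula.

```python
from statistics import mean, pstdev


def burst_score(history, current):
    """How many standard deviations `current` sits above the historical mean."""
    mu = mean(history)
    sigma = pstdev(history) or 1.0  # flat history: fall back to 1.0 to avoid dividing by zero
    return (current - mu) / sigma


def buzziness(signals, weights=None):
    """Weighted sum of positive bursts across signals.

    signals: name -> (history, current), e.g. query volume, content mentions, inbound links.
    weights: name -> weight (default 1.0). Drops below the mean contribute nothing.
    """
    weights = weights or {}
    return sum(
        weights.get(name, 1.0) * max(0.0, burst_score(history, current))
        for name, (history, current) in signals.items()
    )
```

A query could then be flagged as recency-sensitive when its buzziness passes a tuned threshold, which is the kind of value human judgments could help calibrate.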

In addition to various “buzziness” factors, there is a click-buzz feature, which looks at whether a particular result is experiencing disproportionate attention relative to historical data.

An interesting quote in the paper states:

“Experiments indicate that approximately 34.6% of the collected network resources are considered to be recency-sensitive”

This quote mirrors the Google announcement:

“impacts roughly 35 percent of searches”

A Few Thoughts

I’ll repeat a statement I made in my phrase-based semantics post: I feel Google is improving its ability to process data at scale, especially with machine learning. I think we’re seeing advancements pioneered in Panda applied to other hard-to-solve problems. Papers with theories and ideas that are several years old finally seem to be making their way into search results. I think resource limitations kept them from implementing some of these already-solved methodologies, because they couldn’t execute them at scale. I think the Caffeine and Panda updates set the stage for a number of advances.
